Reforms supporting success – enabling project success through good assurance

Since 2021, the Australian Government has invested in strengthening central oversight of digital projects. This central oversight works to ensure best practice is systematically applied as digital projects are designed and delivered across agencies. By driving the adoption of best practice, central oversight plays a key role in giving each digital project the best chance of success.

The Assurance Framework for Digital and ICT Investments mandates global best practice in the use of assurance for digital projects. While assurance doesn’t in itself deliver outcomes, effective assurance is critical to good governance and decision-making. All projects in this report are subject to the Assurance Framework and must apply its ‘key principles for good assurance’. These principles draw on global best practice and, when applied effectively, provide confidence that digital projects will achieve their objectives, without leading to excessive levels of assurance.

The Assurance Framework also includes escalation protocols to help agencies resolve delivery challenges that digital projects might encounter. Central oversight of assurance also ensures that lessons learned across digital projects are systematically incorporated into the design and delivery of future projects, reducing the risk of delivery issues arising in future.

Reforms supporting success – ensuring digital projects are well designed

The DTA works with agencies to ensure robust and defensible proposals for spending on all new digital projects.

Each proposal must align with the government’s strategies, policies and best practice digital standards as part of the Digital Capability Assessment Process (DCAP).

For complex, high-risk and high-cost digital projects, the DTA offers additional support through the ICT Investment Approval Process (IIAP). This involves working with agencies to develop and mature implementation planning to support success. A comprehensive business case must clearly demonstrate the need for funding, based on thorough policy development, a well-planned approach to delivery and mechanisms for reviewing project progress. This process aids government decision-making on whether to fund large and complex digital project proposals.

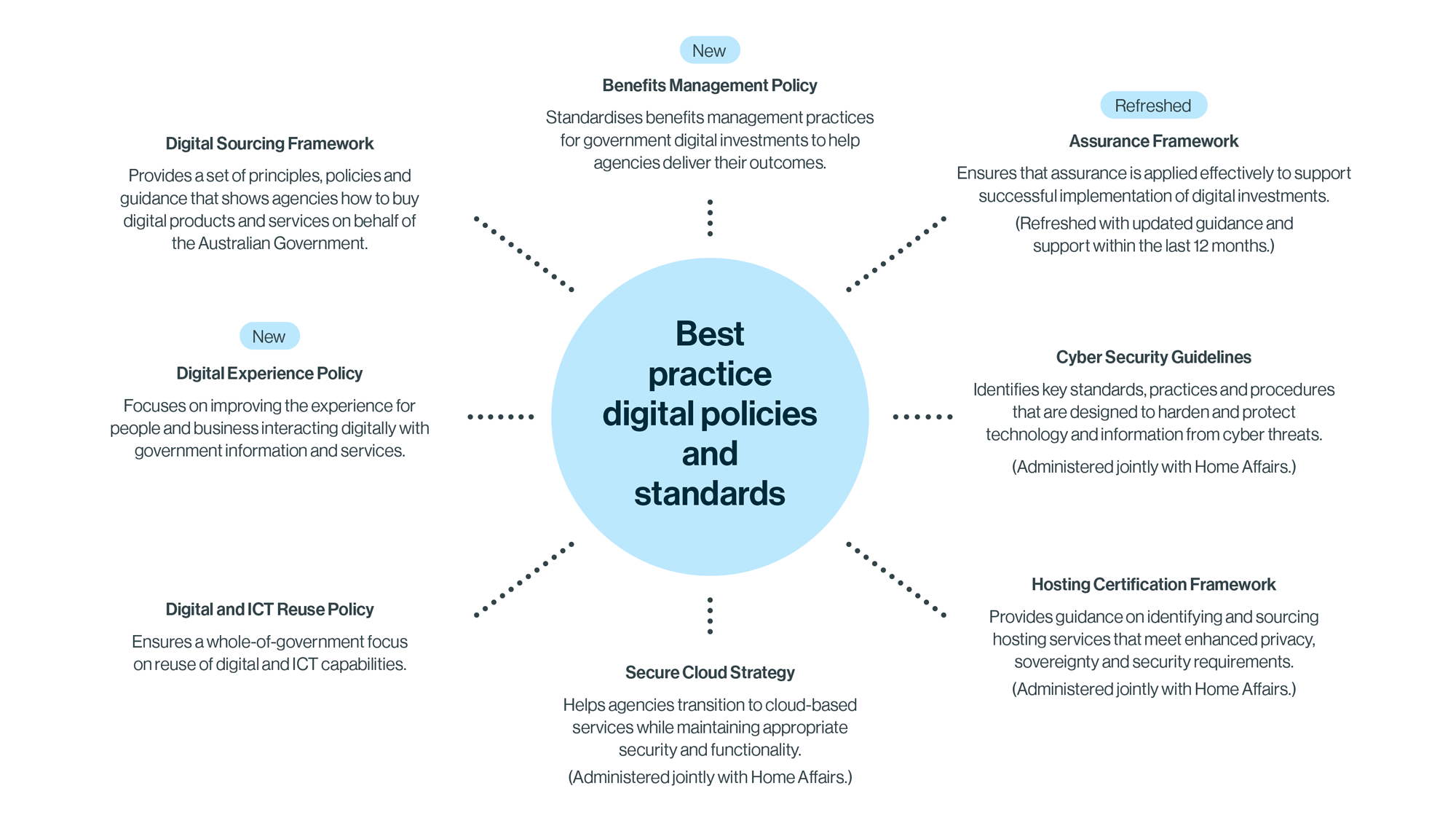

The DTA’s digital policies and standards codify best practice and ensure digital projects are positioned to succeed

The policies that apply to digital projects in the Australian Government are constantly being reviewed and updated. This is necessary to ensure they best support agencies in delivering the world-class data and digital capabilities needed to support the missions set out in the Data and Digital Government Strategy.

Image description

The image shows a central circle with lines leading off to the subheadings.

Image headline (in the centre): 'Best Practice Digital Policies and Standards'

Subheadings:

- Secure Cloud Strategy

  - Helps agencies transition to cloud-based services while maintaining appropriate security and functionality.

  - (Administered jointly with Home Affairs)

- Hosting Certification Framework

  - Provides guidance on identifying and sourcing hosting services that meet enhanced privacy, sovereignty and security requirements.

  - (Administered jointly with Home Affairs)

- Cyber Security Guidelines

  - Identifies key standards, practices and procedures designed to harden and protect technology and information from cyber threats.

  - (Administered jointly with Home Affairs)

- Assurance Framework (Refreshed)

  - Ensures that assurance is applied effectively to support successful implementation of digital investments.

  - (Refreshed with updated guidance and support within the last 12 months)

- Benefits Management Policy (New)

  - Standardises benefits management practices for government digital investments to help agencies deliver their outcomes.

- Digital Sourcing Framework

  - Provides a set of principles, policies and guidance that shows agencies how to buy digital products and services on behalf of the Australian Government.

- Digital Experience Policy (New)

  - Focuses on improving the experience for people and businesses interacting digitally with government information and services.

- Digital and ICT Reuse Policy

  - Ensures a whole-of-government focus on reuse of digital and ICT capabilities.

Reforms supporting success – improving benefits management capability

The Australian Government’s Benefits Management Policy for Digital and ICT-Enabled Investments requires agencies to use best practice benefits management for their digital projects. Projects must identify measurable benefits with clear baselines and targets before funding decisions are taken. The minimum policy requirements are adjusted based on project tier, but all projects must focus on securing benefits in addition to preventing cost and schedule overruns.

The DTA oversees the realisation of benefits and identifies emerging risks across digital projects. We also focus on providing advice, support and training to improve public service capabilities.

Investment in this area aims to ensure that digital projects deliver anticipated benefits to the government and Australians.

Image description

Diagram headline: “Active projects by tier, budget and average duration”.

The diagram shows the increase in the total number and budget of projects under assurance oversight between February 2024 and February 2025. In February 2024, there were 89 active projects worth a total of $6.2 billion, all continuing under oversight. Over the following year, 32 of these projects left oversight, 1 was paused and 56 continued. With 54 projects joining, February 2025 shows 110 active projects (56 continuing and 54 newly joined) worth a total of $12.9 billion.
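The project counts in the diagram description are easier to follow as a simple reconciliation. The following is a minimal Python check. It rests on an assumption, which is our reading of the description rather than a stated method: every February 2024 project either left oversight, was paused or continued, and the February 2025 total counts only continuing and newly joined projects.

```python
# Project counts taken from the diagram description.
feb_2024_active = 89
left_oversight = 32
paused = 1
continuing = 56
joined = 54

# Assumption: each February 2024 project either left, was paused or continued.
assert left_oversight + paused + continuing == feb_2024_active

# Active projects in February 2025 = projects continuing + projects that joined.
feb_2025_active = continuing + joined
print(feb_2025_active)  # 110
```

Under this reading, the paused project is not counted among the 110 active projects.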

Most projects coming under central assurance oversight in the past year have been Tier 2 and Tier 3 level investments

| Investment tier | Projects | Total budget | Median total budget | Average duration |
|---|---|---|---|---|
| Tier 1: Flagship digital investments | 9 | $1.3 billion | $154.7 million | 3.2 years |
| Tier 2: Strategically significant digital investments | 20 | $5.7 billion | $58.8 million | 3.6 years (see the table note) |
| Tier 3: Significant digital investments | 25 | $1.4 billion | $24.0 million | 2.2 years |

Table note: Tier 2 average project duration is affected by 2 outlier projects, each with a duration of 35.0 years. Average duration including these 2 projects is 6.9 years.

Tier 3 projects made up the largest number of additional projects. These projects are usually lower risk and have smaller budgets, with most investing in ‘sustainment’ and ‘product/service enhancement’ rather than establishing wholly new digital capabilities. The increase in Tier 3 investments reflects ongoing efforts to move away from higher-risk large and complex projects to smaller, ‘bite-size’ projects where possible. Evidence suggests these smaller projects generally have a higher rate of success.

Large investments will still be necessary in some cases, and several have been commissioned since the last report. Strong planning and oversight are crucial to ensure new higher-risk investments do not exceed available delivery capacity. Strengthening central oversight, including digital investment planning and prioritisation, is key to balancing project loads against capacity. It is also key to coordinating efforts to expand the capabilities of agencies and delivery partners to handle expected growth in digital investment.

Reforms supporting success – planning for the future

From the 2026–27 Budget, Commonwealth agencies will be required to develop digital and ICT investment plans. These plans will provide a future-focused understanding of the complexities across the government’s digital and ICT landscape and identify future investment needs for digital services.

Digital and ICT investment plans will provide short, medium and long-term views of projects. This will help to balance capacity, instil a culture of strategic digital investment planning focused on the future, improve understanding of criticality and risk, and support long-term ambitions to achieve better digital outcomes for Australians as part of the Data and Digital Government Strategy. The investment plans will also increase visibility of digital investments across agencies, enabling the trial and adoption of new technologies, greater coordination of digital enhancements, and more integrated service delivery across agencies.

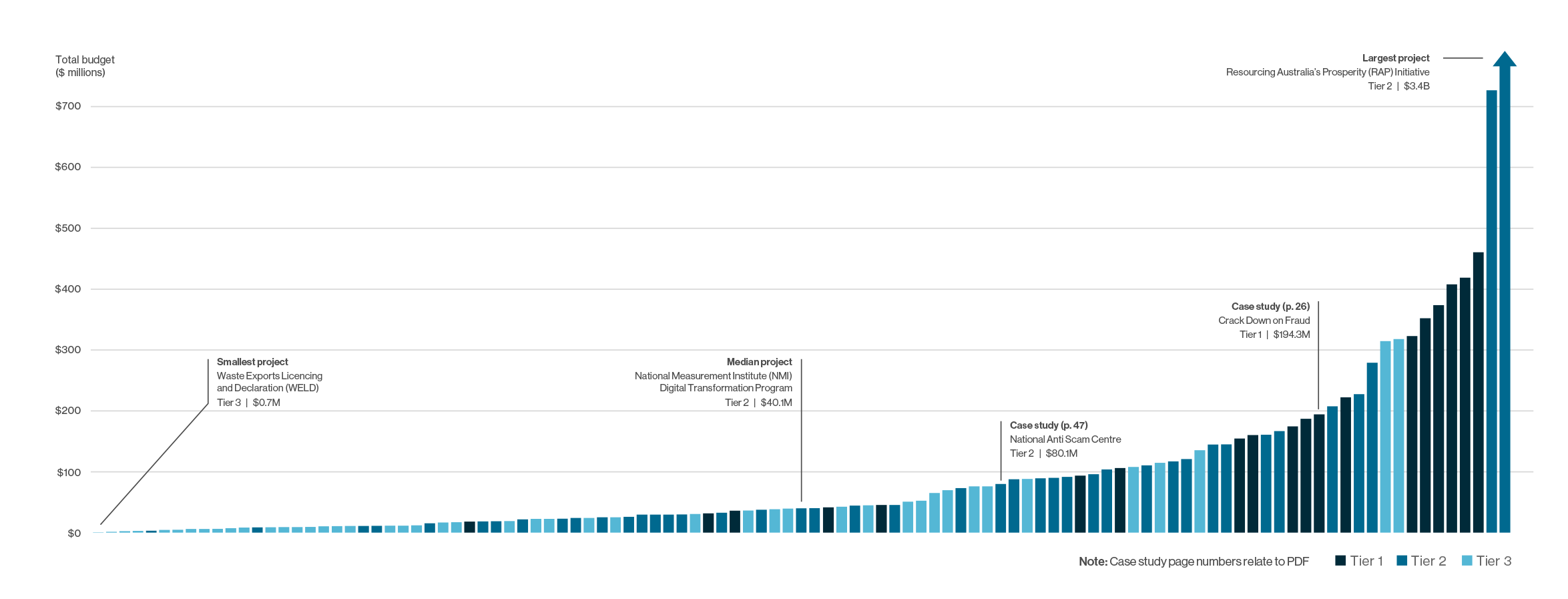

The distribution of projects by total budget highlights the diversity of projects underway across the Australian Government

Image description

The chart shows the distribution of projects by total budget, in order of smallest to largest budget, broken into Tiers 1, 2 and 3.

The smallest project is Tier 3: Waste Exports Licensing and Declaration (WELD), $0.7 million.

The median project is Tier 2: National Measurement Institute (NMI) Digital Transformation Program, $40.1 million.

The case study on page 47 is Tier 2: National Anti-Scam Centre, $80.1 million.

The case study on page 26 is Tier 1: Crack Down on Fraud, $194.3 million.

The largest project is Tier 2: Resourcing Australia’s Prosperity (RAP) Initiative, $3.4 billion.

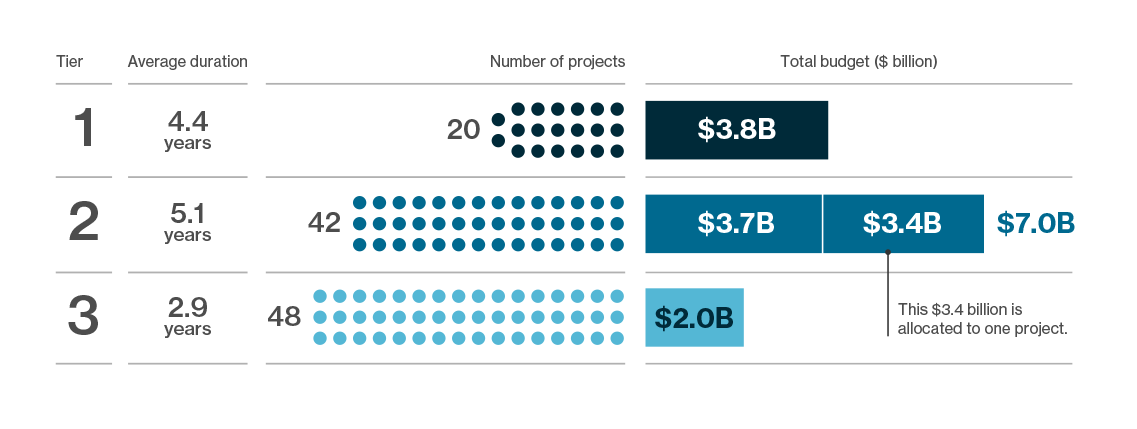

Tier 2 and Tier 3 projects make up the bulk of all active projects under central assurance oversight

Image description

Diagram headline: 'Active projects by tier, budget and average duration'.

The diagram shows active projects by tier, budget and average duration.

20 Tier 1 projects have a total budget of $3.8 billion and an average duration of 4.4 years.

42 Tier 2 projects have a total budget of $7 billion and an average duration of 5.1 years. Of this budget, $3.4 billion relates to a single project and $3.7 billion is spread across the remaining 41 projects.

48 Tier 3 projects have a total budget of $2 billion and an average duration of 2.9 years.

Of note, almost half of the total budget of all Tier 2 projects relates to a single, multi-decade investment valued at $3.4 billion. This outlier skews the average Tier 2 budget ($167.8 million), so the median budget of $45.0 million better reflects the typical size of a Tier 2 project.
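To see how far a single outlier moves the average, the published Tier 2 figures can be reworked as shown below. This is a minimal sketch using the rounded numbers from this section. Recovering the total as mean × count is an approximation, so the results are indicative only.

```python
# Published Tier 2 figures, in millions of dollars (rounded in the report).
tier2_count = 42
tier2_mean = 167.8          # average budget per Tier 2 project
tier2_median = 45.0         # median budget per Tier 2 project
outlier_budget = 3_400.0    # single multi-decade investment, ~$3.4 billion

# Approximate the total from the mean, then remove the outlier.
tier2_total = tier2_mean * tier2_count
mean_without_outlier = (tier2_total - outlier_budget) / (tier2_count - 1)

print(f"Approximate total:      ${tier2_total:,.1f}m")
print(f"Outlier share of total: {outlier_budget / tier2_total:.1%}")
print(f"Mean excl. outlier:     ${mean_without_outlier:,.1f}m")
print(f"Published median:       ${tier2_median:,.1f}m")
```

The outlier accounts for about 48.2% of the approximate total. Dropping it takes the mean of the other 41 projects to about $89.0 million, which still sits above the $45.0 million median.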

Flagship digital investments (Tier 1) represent 18.2% of active projects and approximately 29.6% of the total budget. Just over half of all Tier 1 projects report a planned completion date between June 2025 and June 2026. Strong ongoing investment planning and prioritisation within each of these projects will be essential for Senior Responsible Officials to deliver them smoothly over the next 12 months.

For all tiers, experience shows that the projects most likely to deliver expected benefits on time and on budget have robust approaches to key project management disciplines including governance, risk, benefits and assurance. Strengthening approaches in these areas is a priority for the DTA in our work overseeing all the digital projects included in this report.

Case study: Social services

National Disability Insurance Agency (NDIA): Preventing disability insurance fraud

At a glance

Making it easier to get it right and harder to get it wrong

Tier 1

Investment

Tranche 1 (2024)

$83.9 million

Tranche 2 (2025)

$110.4 million

Benefits

Improved integrity and security

$200 million in savings

$400 million redirected from dishonest providers

Summary

The Crack Down on Fraud (CDoF) Program is enhancing the NDIA’s capabilities by streamlining processes and improving ICT systems to detect and prevent fraud and non-compliance.

On 18 February 2024, the government announced initial Tranche 1 funding of $83.9 million to strengthen the National Disability Insurance Scheme (NDIS) and make sure every dollar is going to participants who need it.

The CDoF Program has immediately addressed emerging and high-risk issues identified by the Fraud Fusion Taskforce (FFT) – a partnership between 19 Commonwealth agencies, co-led by the NDIA and Services Australia.

It is improving:

- systems that assess, process and pay over 400,000 NDIS claims per day

- systems that check identities to increase participant safety and privacy

- the my NDIS app and NDIS portals.

In addition, the program is building:

- new ICT systems to connect with other agencies, providers and banks so claims and payments can be processed faster and with fewer errors

- a new fraud investigation system that will connect with other enforcement agencies.

Saving millions and enhancing security

The program quickly improved payment validation and substantiation and enhanced pre-payment integrity, ahead of introducing long-term technical solutions. By 30 June 2024, these improvements had enabled over $200 million in savings from non-compliant claims, with a further $400 million forecast to be diverted from dishonest providers to genuine disability supports and services.

The CDoF Program has also enhanced identity integrity and provided a consistent, secure experience for accessing NDIS digital platforms via myGov. In its first year, the program delivered a data lake for fraud detection, an integrity management system for investigations, the first phase of a new API Gateway for easier provider interaction with NDIA and stronger cyber event detection. Where appropriate, strategies included leveraging whole-of-government arrangements and reusing existing solutions.

Helping to transform lives

"The NDIS is absolutely transforming the lives of people with disability. It represents the inclusive spirit of our Australian community. The system uplifts being delivered by the talented teams in the CDoF Program will ‘make it easier to get it right and harder to get it wrong’, thus protecting NDIS participants and ensuring the sustainability of the scheme."

Martin Mane

General Manager Integrity Transformation, NDIA

The program is being delivered in parallel to the most significant legislative reforms since the NDIS started. Participants remain at the heart of this transformation, and the program is working with the disability community to deliver valuable changes.

Improving transparency for Australians on the performance of digital projects

In recent years, the Australian Government has actively invested not just in new digital projects but in understanding what projects are underway and how they can best be supported to succeed. Transparency is an essential ingredient for good governance and this section sets out the improvements which have been made to ensure Australians know how their digital projects are performing.

Almost all Tier 1 and 2 projects now have delivery confidence assessments

Tier 1 and 2 digital projects must undertake regular delivery confidence assessments (DCAs) under the Assurance Framework for Digital and ICT Investments. DCAs indicate how likely a project is to meet its objectives at a given point in time. DCA ratings range from High to Low (see Appendix for details).

A lower DCA rating signals issues or risks that need to be addressed. However, a low rating does not necessarily mean a project will fail. Instead, it’s an early warning system that allows for timely interventions to support project teams in mitigating risks and overcoming challenges. By taking the right steps, projects can recover from lower delivery confidence ratings and go on to deliver expected outcomes for Australians on budget and on schedule.

The DTA plays a crucial role in this process. When delivery confidence decreases, we work closely with agencies to make sure they take the right measures. This involves:

- providing guidance, resources and support to project teams

- facilitating the best use of assurance processes

- promoting strategies to address issues as early as possible, when course corrections are more likely to succeed.

Ultimately, this collaborative effort aims to enhance the likelihood of successful project delivery, ensuring that investments provide expected benefits to Australians and businesses.

Since the introduction of the Australian Government’s Digital and ICT Investment Oversight Framework in November 2021, there has been a concerted focus on increasing understanding of how digital projects are performing, as well as the conditions that need to exist to best support their success.

In the last report in February 2024, 52.1% of Tier 1 and 2 projects included a delivery confidence assessment. By February 2025, this had increased to 98.4%.
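Both coverage percentages can be reproduced from the rating counts given in the diagram description later in this section. This is a minimal sketch. It assumes coverage means the number of rated projects divided by all Tier 1 and 2 projects in each period.

```python
# Delivery confidence rating counts for Tier 1 and 2 projects, taken from the
# "Change in delivery confidence ratings over 12 months" diagram description.
feb_2024 = {"High": 3, "Medium-High": 12, "Medium": 7, "Medium-Low": 3,
            "Unavailable": 23}
feb_2025 = {"High": 8, "Medium-High": 30, "Medium": 14, "Medium-Low": 7,
            "Low": 2, "Unavailable": 1}


def dca_coverage(counts: dict[str, int]) -> float:
    """Share of projects that have a delivery confidence rating."""
    total = sum(counts.values())
    rated = total - counts.get("Unavailable", 0)
    return rated / total


print(f"February 2024: {dca_coverage(feb_2024):.1%}")  # 52.1% (25 of 48)
print(f"February 2025: {dca_coverage(feb_2025):.1%}")  # 98.4% (61 of 62)
```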

Transparency and understanding of project performance is increasing

Efforts have focused on improving the availability and quality of DCAs. These are conducted by skilled independent assurers whenever possible to ensure an objective perspective. In this report, 80.3% of assessments were completed by independent assurers under the Assurance Framework, with 90.0% of Tier 1 projects meeting this standard. The remaining delivery confidence ratings reflect self-assessments by the relevant agency.

Change in delivery confidence ratings over 12 months

Image description

Diagram headline: 'Change in delivery confidence ratings over 12 months'.

The diagram shows how delivery confidence ratings changed over the 12 months from February 2024 to February 2025, noting that transparency and understanding of project performance are increasing.

In February 2024, 3 projects had high delivery confidence, 12 had medium-high, 7 had medium, 3 had medium-low and 23 projects did not have delivery confidence ratings available.

In February 2025, 8 projects had high delivery confidence, 30 had medium-high, 14 had medium, 7 had medium-low and 2 had low. One project had no DCA available because its commencement was delayed; a DCA is to be conducted shortly.

80.3% independent assessments

In this report, 80.3% of assessments were completed by independent assurers under the Assurance Framework, with 90.0% of Tier 1 projects meeting this standard. This independence is key to ensuring often complex and challenging digital projects receive the expert, objective advice they need to succeed.

Reforms supporting success – bringing objectivity and rigour to assessing delivery confidence

Delivery confidence assessments are vital for directing effort and support to where it is most needed to ensure the success of all the Australian Government’s digital projects. Therefore, these assessments must be objective and rigorous.

In 2024, the University of Sydney’s John Grill Institute of Project Leadership worked in collaboration with the DTA to prepare best practice guidance on assessing the delivery confidence of digital projects. This guidance identifies the factors that are most significant in the success and failure of digital projects, and sets out how they should be considered when forming an assessment.


How the Australian Government’s digital projects are performing

This section sets out how digital projects are performing. Digital projects present unique challenges and the reforms set out in previous sections are playing a key role in ensuring the conditions exist for each and every project included in this report to succeed.

Projects worth $7.3 billion of total budget are on-track

Consistent DCAs for major digital projects provide an overview of each project’s performance, spotlighting where support is needed most. This transparency also informs reforms aimed at creating the conditions digital projects need to succeed and to improve public services and people’s lives.

Across the 2 years of reporting, the DCAs show many projects in the portfolio are on track to deliver agreed outcomes. This reflects the easing of technology supply disruptions related to the COVID-19 pandemic and the ongoing investment in strengthening how the Australian Government designs and delivers its digital projects.

Total number and budget of projects in each delivery confidence rating category (Tier 1 and Tier 2)

Image description

Diagram headline: 'Total number and budget of projects in each delivery confidence rating category, by Tier 1 and Tier 2'

The diagram shows the following for Tier 1 projects:

- No projects with High delivery confidence

- 11 projects with Medium-High delivery confidence and a total budget of $1.5 billion

- 5 projects with Medium delivery confidence and a total budget of $1.0 billion

- 2 projects with Medium-Low delivery confidence and a total budget of $0.7 billion

- 2 projects with Low delivery confidence and a total budget of $0.5 billion

- No projects with an Unavailable delivery confidence rating

The diagram shows the following for Tier 2 projects:

- 8 projects with High delivery confidence and a total budget of $0.5 billion

- 19 projects with Medium-High delivery confidence and a total budget of $5.3 billion, of which $3.4 billion relates to the Resourcing Australia’s Prosperity (RAP) Initiative and $2.0 billion to the remaining 18 projects

- 9 projects with Medium delivery confidence and a total budget of $0.6 billion

- 5 projects with Medium-Low delivery confidence and a total budget of $0.6 billion

- No projects with Low delivery confidence

- 1 project with an Unavailable delivery confidence rating and a total budget of $18.9 million
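The $7.3 billion headline figure can be reproduced from the budgets listed above. The sketch rests on one assumption, which is ours because this passage does not define the term: "on track" means a High or Medium-High rating. It is a minimal Python check using the rounded figures from the diagram description.

```python
# Budgets in $ billions by delivery confidence rating, from the diagram
# description. The Unavailable Tier 2 project ($18.9 million) is 0.0189.
tier1 = {"High": 0.0, "Medium-High": 1.5, "Medium": 1.0,
         "Medium-Low": 0.7, "Low": 0.5, "Unavailable": 0.0}
tier2 = {"High": 0.5, "Medium-High": 5.3, "Medium": 0.6,
         "Medium-Low": 0.6, "Low": 0.0, "Unavailable": 0.0189}

# Assumption: "on track" covers the High and Medium-High categories.
ON_TRACK = ("High", "Medium-High")

on_track_budget = sum(tier[rating]
                      for tier in (tier1, tier2)
                      for rating in ON_TRACK)
print(f"On-track budget: ${on_track_budget:.1f} billion")  # $7.3 billion
```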

Changes in delivery confidence

New major digital projects are generally starting off well, with more than three-quarters reporting High or Medium-High delivery confidence.

The government’s digital projects are being delivered against a backdrop of rapid and continuous technological change. This dynamic environment is reflected in the changes in delivery confidence ratings over the past year as projects move through different stages in their development.

Understanding overall changes in delivery confidence to target engagement and reforms

Most (75.9%) of the 29 Tier 1 and 2 projects entering oversight since February 2024 report a High or Medium-High delivery confidence rating. These projects commonly cite 3 factors behind their initial rating: establishing effective governance early, having well-prepared documentation and artefacts, and ensuring experienced and capable personnel are ready.

This is an early sign that investment to strengthen digital project design processes is increasing overall delivery confidence. Projects often start with lower levels of delivery confidence, but the recent emphasis on ensuring mature planning is in place before projects start appears to be paying dividends. This contrasts with the United Kingdom, where ‘it is not unusual for projects to be rated as Red earlier in their lifecycle, when scope, benefits, costs and delivery methods are still being explored’ (Infrastructure and Projects Authority 2024 p.13).

Reforms supporting success – partnering with industry to deliver digital projects

Recognising the crucial role of technology vendors in delivering the Australian Government’s ambitions for digital transformation, the Digital and ICT Investment Oversight Framework includes ‘sourcing’ as an area of focus. As part of this, the DTA coordinates marketplaces and agreements designed to enable agencies to easily access technology goods and services to support their digital projects. In 2023–24, the Australian Government sourced more than $6.4 billion of digital products and services from industry via these marketplaces and agreements. By accessing these arrangements through the BuyICT platform, agencies benefited from the Australian Government’s collective buying power and strengthened terms and conditions.

The DTA’s latest ICT labour hire and professional services panel, the Digital Marketplace Panel 2, adopts the APS Career Pathfinder dataset and Skills Framework for the Information Age (SFIA) to classify ICT labour hire opportunities. The classification of roles and greater panel pricing transparency provide clearer signals for in-demand skills, their costs and potential shortages, which will inform delivery capacity and confidence in digital projects. The top in-demand digital and ICT skills sourced by the APS include software engineer, solution architect and business analyst.

Case study: Tax and super

Australian Taxation Office: Creating secure data centres

At a glance

ato.gov.au

$369.7 million investment

Summary

The ATO Data Centre Transformation delivered modern, resilient and secure data centres that keep pace with technology, demand and community expectations while protecting the data they hold.

The ATO undertook this once-in-a-generation infrastructure and data centre modernisation program to align with government directives and ensure the ongoing security and integrity of critical data stores.

The importance and complexity of the ATO’s role in the Australian economy means it must provide digital experiences and services that make it easy for the community to engage while also safeguarding taxpayer data.

This complex project overcame many challenges. Many data centre migrations of this size and complexity fail, leaving the organisation in a hybrid state with significant technical debt. The success of the ATO data centre program was largely due to the collective drive and commitment to overcome technical issues.

The result was the most significant technology transformation at the ATO in 30 years.

"As the Australian Government’s principal revenue collection agency, data underpins everything we do. Our data stores are growing every year, so keeping our systems safe and protecting the personal information entrusted to us by taxpayers is paramount."

Michael Rowell

ATO Deputy Commissioner and Senior Responsible Official

The outcomes delivered directly contributed to or created business benefits for the ATO and the broader community. These include fewer service interruptions so clients can access ATO services when they need to with greater confidence, as well as reduced national security risk for sensitive data.

Note: This project is not featured in this report as it closed before the start of public reporting in 2024. This project is, however, enabling delivery of current ATO projects and is included as an example for this reason.
