Employee-related outcomes

This section outlines trial participants' expectations and use of Microsoft 365 Copilot, including its use across the Microsoft 365 suite, and identifies current, novel and future use cases.

Key insights

Most trial participants (77%) were optimistic about Copilot after the trial, and 86% of post-use survey respondents wish to continue using the product.

The positive sentiment towards Copilot was not uniform across all MS products or activities. In particular, Copilot functionality in MS Excel and Outlook did not meet expectations.

Other generative AI tools may be more effective than Copilot at meeting bespoke user needs. In particular, Copilot was perceived to be less advanced at writing and reviewing code, producing complex written documents, generating images for internal presentations, and searching research databases.

Despite the overall positive sentiment, use of Copilot is moderate, with only a third of post-use survey respondents using it on a daily basis. Due to a combination of user capability, the perceived benefit and convenience of the tool, and its user interface, Copilot is yet to become ingrained in the daily habits of APS staff.

Copilot is currently used mainly for summarisation and re-writing content in Teams and Word.

There was a positive relationship between the provision of training and capability to use Copilot. Copilot training was most effective when tailored to the APS, the users’ role and the agency context.

There are opportunities to expand the use of generative AI across the policy lifecycle to increase its adoption and benefits.

The majority of trial participants are positive about Copilot

Most trial participants are optimistic about Copilot and wish to continue using it.

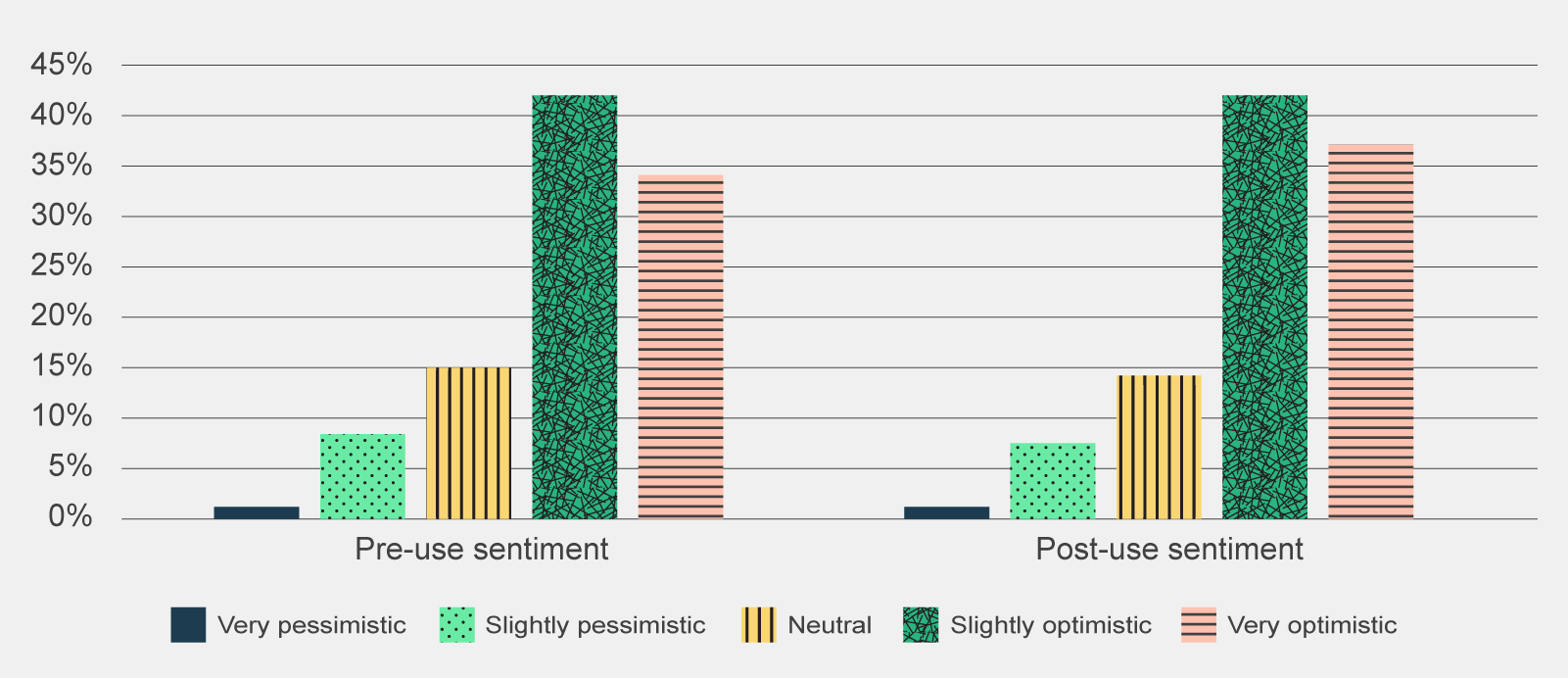

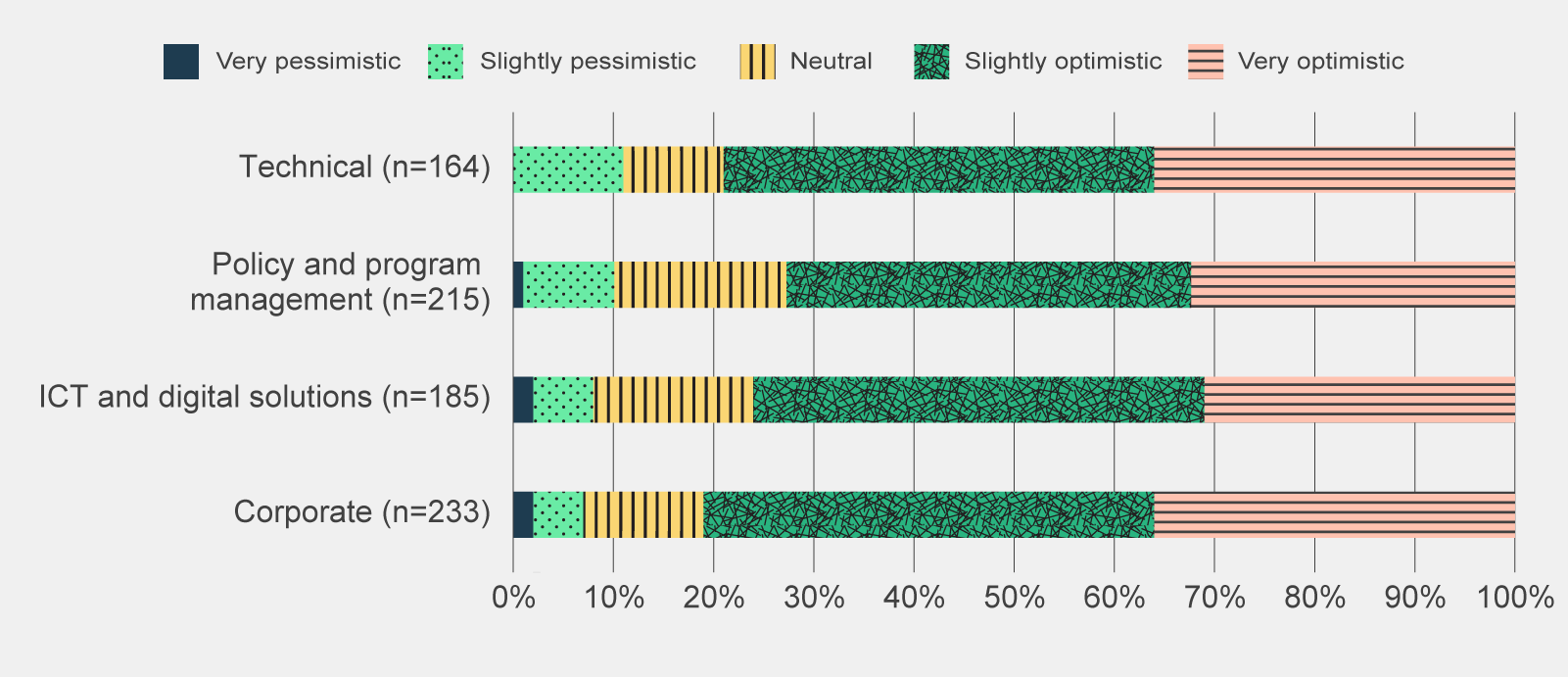

Trial participants had high expectations prior to the start of the trial. As shown in Figure 1, the majority (77%) of survey respondents who completed both the pre-use and post-use surveys reported an optimistic opinion of Copilot. This indicates that trial participants' initially high expectations have largely been met.

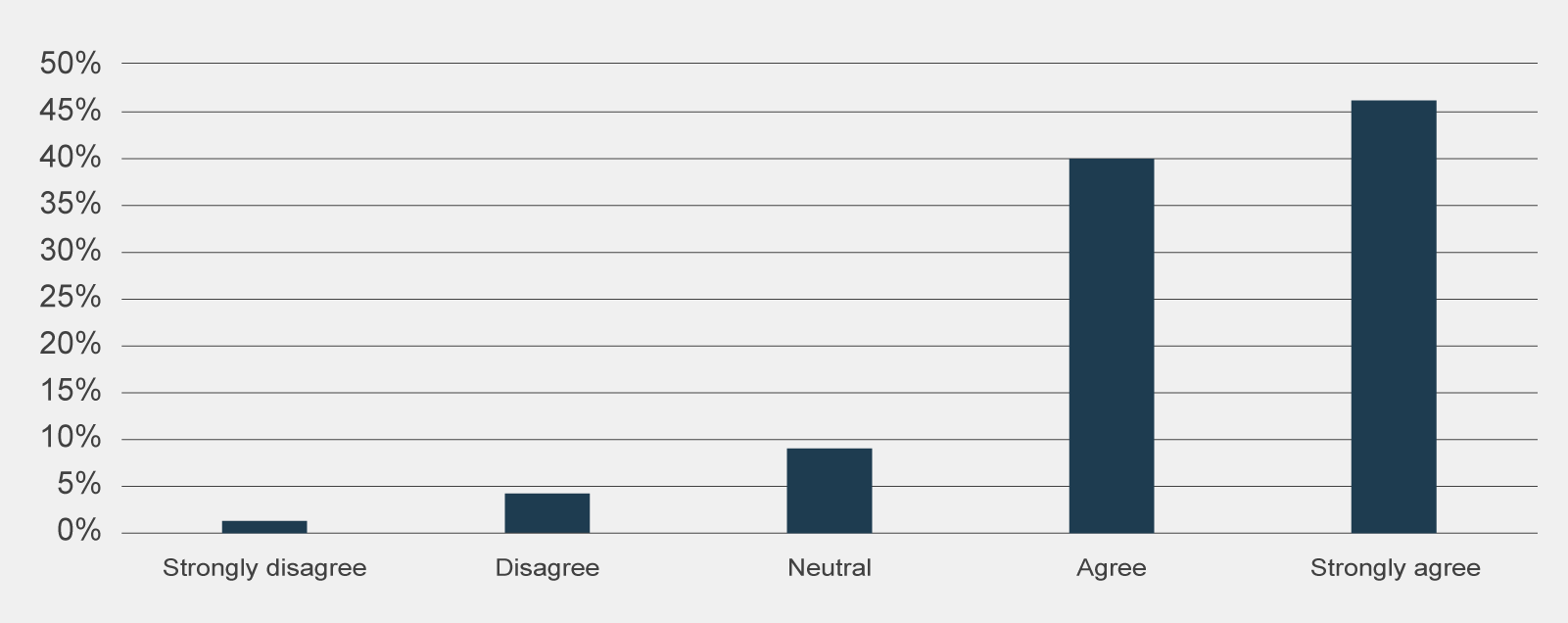

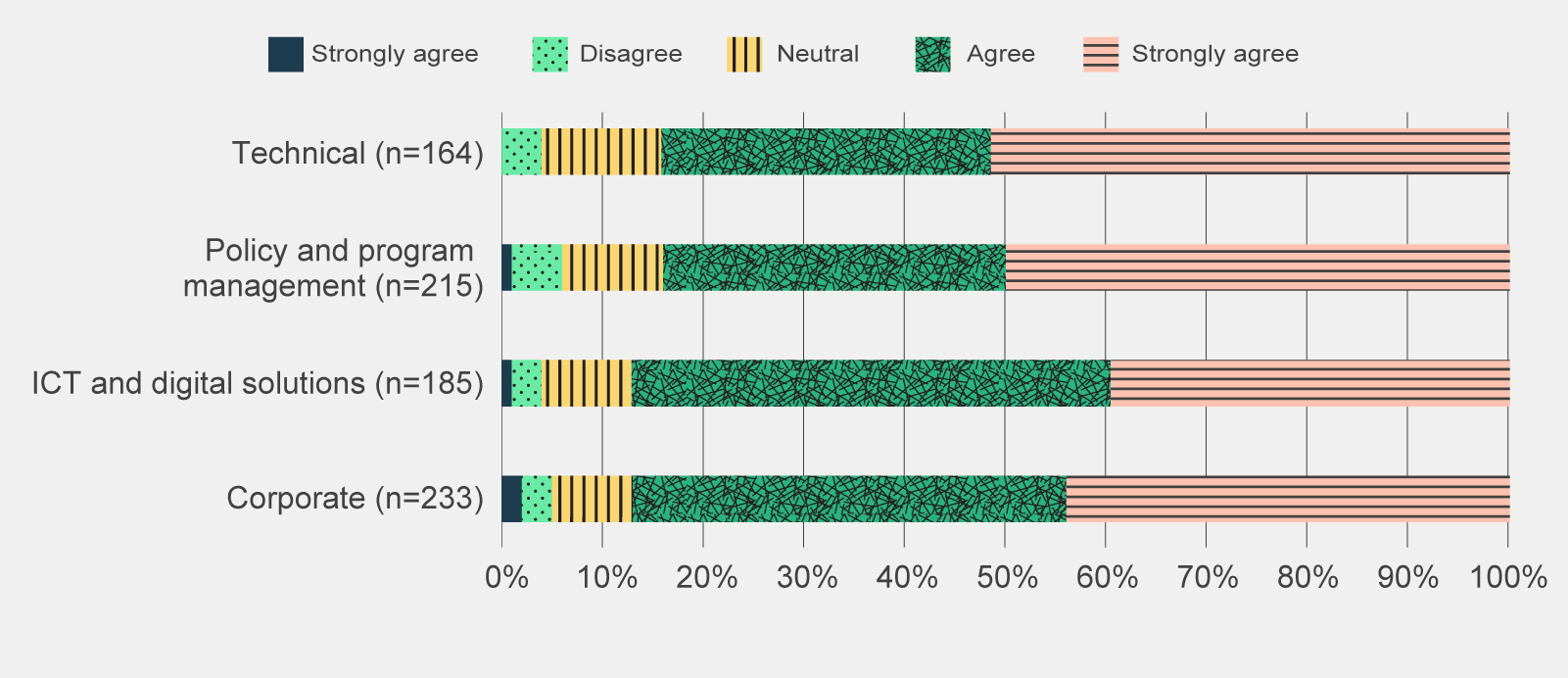

The positive sentiment was even greater when post-use survey respondents were asked if they wish to continue using Copilot after the trial. As shown in Figure 2, 86% agreed or strongly agreed that they want to keep using Copilot, with only 5% disagreeing, highlighting respondents' overwhelming desire to continue using it.

Survey respondents attributed their desire to continue using Copilot to its impact on productivity, praising its ability to summarise meetings and information, create first drafts of documents and support information searches. Trial participants with a negative view of Copilot considered it a ‘time drain’, as additional effort was required to prepare data for prompting and to edit outputs considered inaccurate or irrelevant.

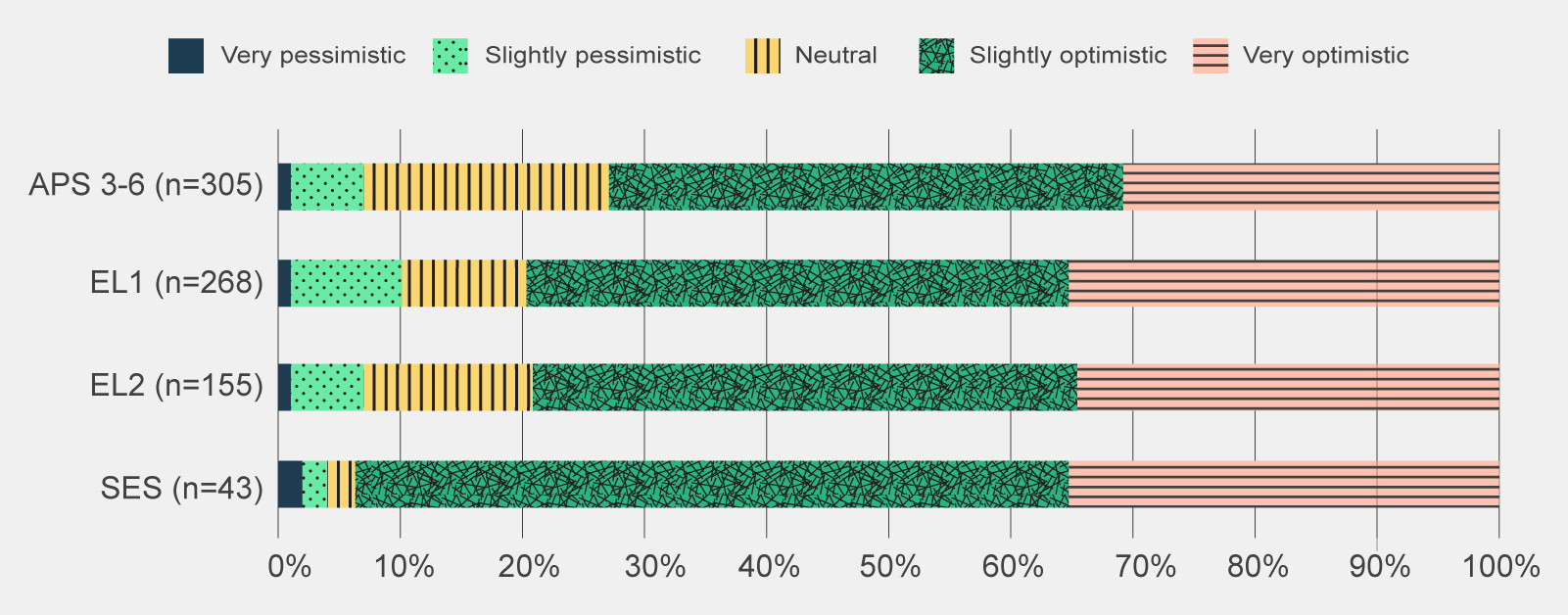

The majority of trial participants across all job families and classifications are positive about Copilot.

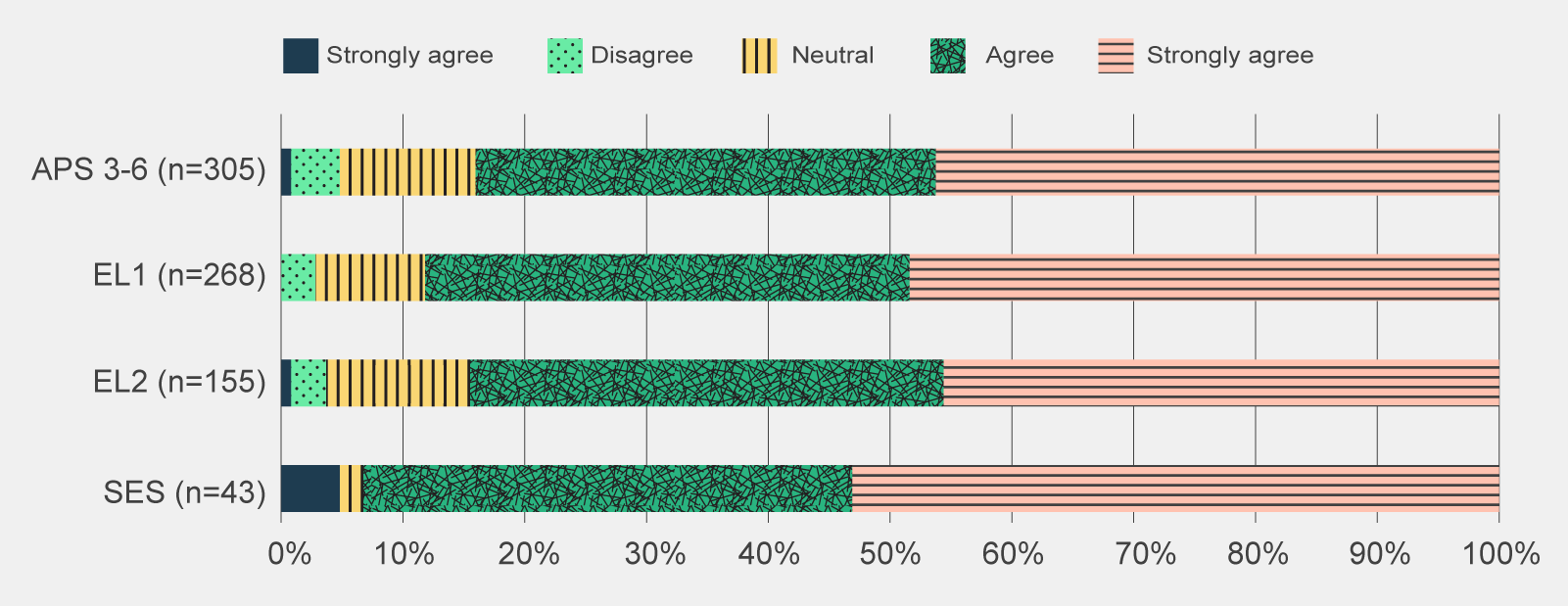

Across all job classifications and job families, the majority of trial participants want to continue to use Copilot. The overwhelming desire to continue using Copilot was also apparent in other agency evaluations: 96% of Department of Industry, Science and Resources (DISR) trial participants wanted to continue using the tool when asked in a mid-trial survey (Department of Industry, Science and Resources 2024).

As shown in Figure 4, SES had the highest proportion of trial participants (93%) wanting to continue to use Copilot. Note that, because the sample of SES respondents was smaller, this estimate is less certain: its margin of error at a 95% confidence level exceeds 0.05 (5 percentage points).
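To illustrate what this note implies, the following worked equation assumes the standard normal (Wald) approximation for the margin of error (MoE) of a proportion. The evaluation does not state which method it used, and the SES sample size is not reported in this section. With an observed proportion of 0.93:

```latex
\begin{aligned}
\text{MoE} &= z_{0.975}\,\sqrt{\frac{\hat{p}\,(1-\hat{p})}{n}}, \qquad z_{0.975} \approx 1.96 \\
\text{MoE} > 0.05 &\iff n < \frac{1.96^{2} \times 0.93 \times 0.07}{0.05^{2}} = \frac{0.2501}{0.0025} \approx 100
\end{aligned}
```

Under this assumption, a 93% estimate would carry a margin of error above 0.05 whenever roughly 100 or fewer SES respondents answered the question. For proportions this close to 1, Wilson or exact intervals are often preferred, so this figure is indicative only.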

SES survey respondents in the post-use survey mainly referenced productivity benefits, both for themselves and their team. SES noted that Copilot appeared to be reducing the time staff spent creating briefing materials and lifting the general professionalism of written documents. On an individual level, it was seen as a tool for saving time by summarising meetings, email conversations and documents. SES survey respondents also viewed the trial as a positive step for the APS in embracing new technology.

Among the job families, trial participants in the Corporate grouping were particularly positive about Copilot, with 86% of post-use survey respondents indicating they would like to continue to use Copilot after the trial, as shown in Figure 6.

Trial participants, regardless of job family, universally praised Copilot for automating time-consuming menial tasks such as searching for information, composing emails or summarising long documents. Trial participants also acknowledged it was a safer alternative to accessing other forms of AI.

The positive sentiment was not uniformly observed across all MS products or activities

There were mixed opinions on the usefulness and performance of Copilot across Microsoft applications.

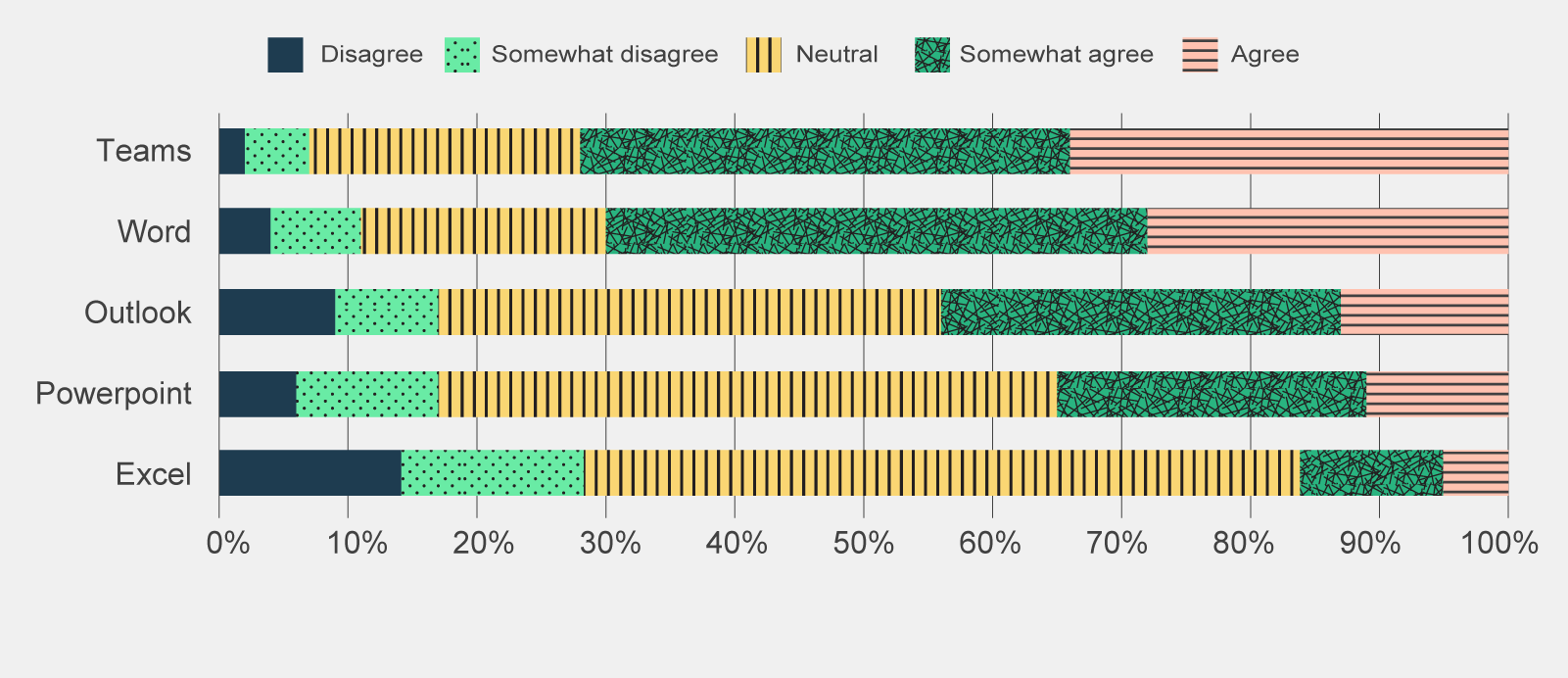

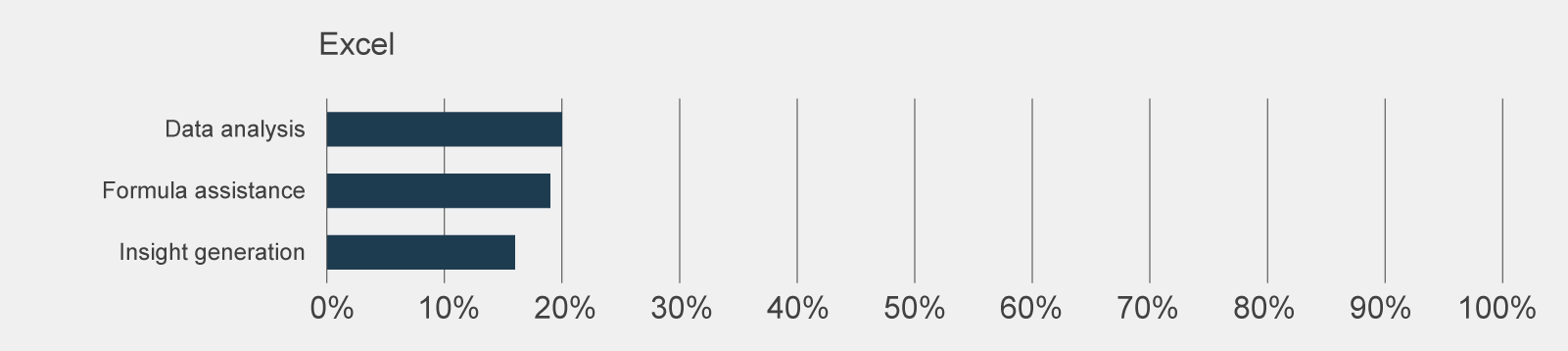

While the majority of pulse survey respondents were positive about Copilot’s functionality in Word and Teams, capabilities in other Microsoft products were viewed less favourably, in particular Excel. As shown in Figure 7, Excel had the largest proportion of negative sentiment with almost a third of respondents reporting that it did not meet their expectations.

Many focus group participants and post-use survey respondents found it difficult to prepare data in Excel for effective prompting, particularly because data needed to be structured in tables. In addition, focus group participants noted that Copilot often either could not complete the requested action or only partially performed it (for example, returning a suggested formula for users to enter rather than performing the analysis itself).

There were also issues with accessing Copilot functionality in MS Outlook. Copilot features in Outlook were only available through either the newest Outlook desktop application or the web version of Copilot. Focus group participants noted that many trial participants were unlikely to have the newest version of Outlook and were therefore unable to access Copilot features in it. To work around this barrier, several post-use survey respondents and focus group participants copied content from Outlook into Word or Teams and then prompted Copilot for assistance there. The inability to access Copilot in Outlook likely weighed down participant sentiment for this product.

There has been a reduction in positive sentiment across most activities that trial participants had expected Copilot to assist with.

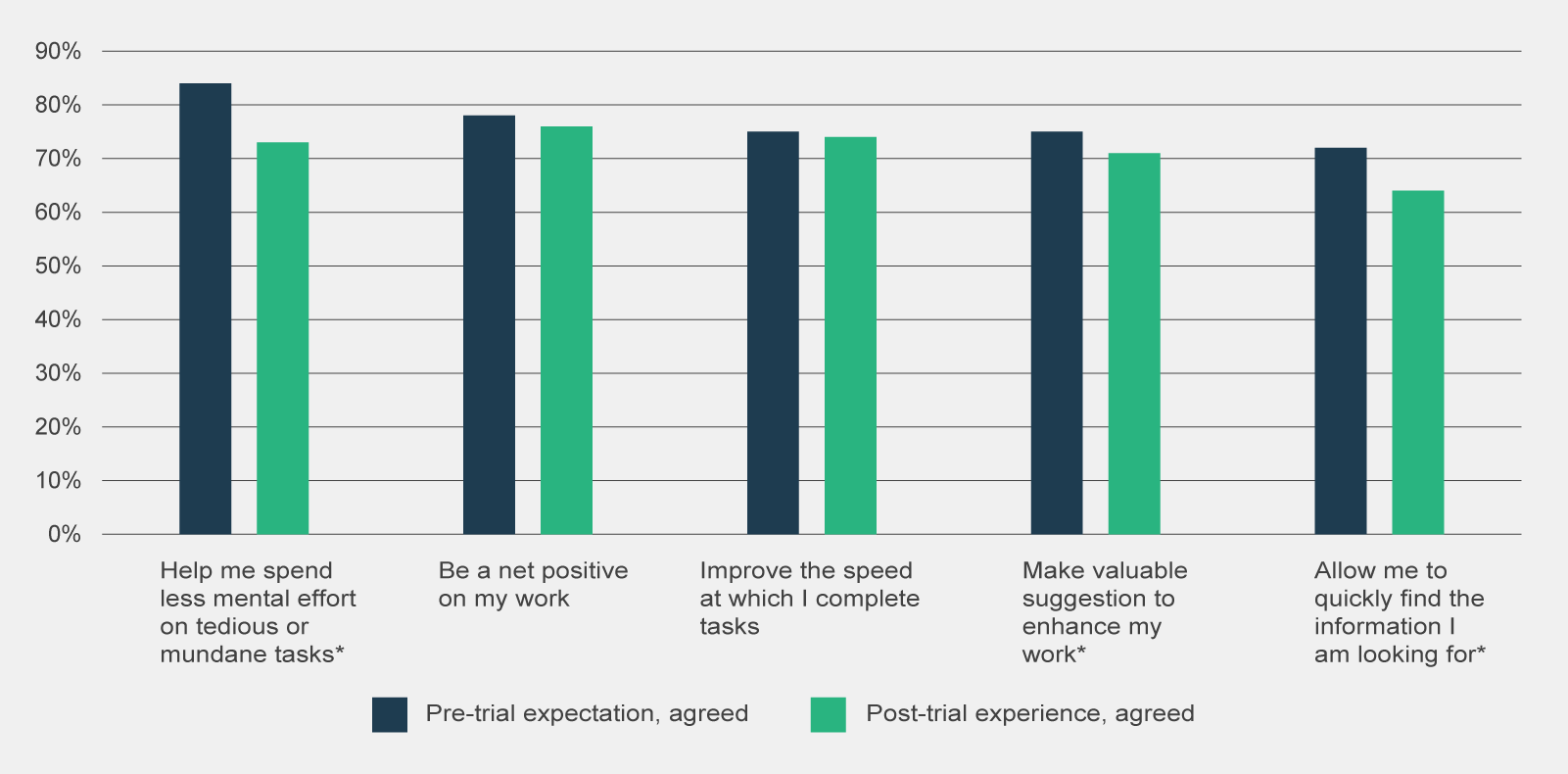

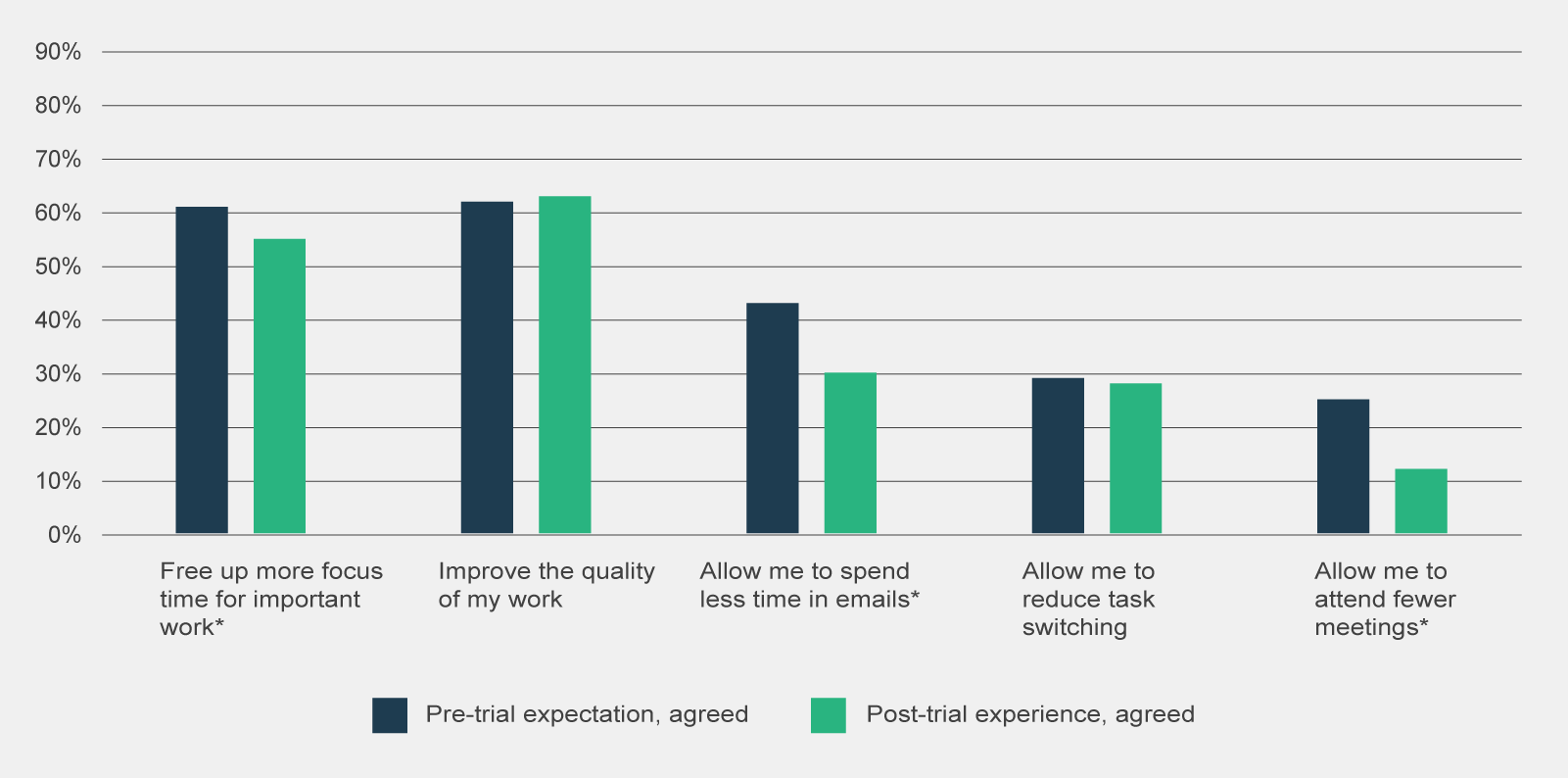

Trial participants who completed both the pre-use and post-use surveys recorded a reduction in positive sentiment across most activities Copilot was expected to support. As shown in Figure 8, although sentiment remains positive for most of these activities, participants' initial expectations of Copilot were not fully met.

Figure 8 | Comparison of combined 'somewhat agree', 'agree' and 'strongly agree' responses to pre-use survey statement 'I believe Copilot will…' and post-use survey statement 'Using Copilot has…', by type of observation (n=330)

It is likely that trial participants' expectations were heightened prior to the commencement of the trial. During consultations, it was noted that the features of Copilot (and generative AI more broadly) were marketed as being able to save participants significant time, thereby heightening their expectations. These expectations appear to have been tempered once participants used Copilot.

Of note, the largest reductions in positive sentiment were observed in activities where survey respondents had the lowest expectations. There was a 32% decrease in the belief that Copilot would allow participants to ‘spend less time in emails’ and a 54% reduction in the belief that it would allow them to ‘attend fewer meetings’. Even with low expectations, survey respondents did not perceive that Copilot was able to assist with these activities.

Other generative AI tools may be more effective than Copilot at meeting bespoke user needs

The small proportion of trial participants who use other generative AI products in a work capacity found those tools met their needs slightly better than Copilot.

Of the post-use survey respondents, 16% reported that they use other generative AI products to support their role. Post-use survey respondents reported using the following tools:

- Versatile LLMs – ChatGPT, Gemini, Claude, Amazon Q, Meta AI

- Development tools – GitHub Copilot, Azure

- Image generators/editors – Midjourney, DALL-E 2, Adobe, Canva

As shown in Table 2, 44% of post-use survey respondents who used other generative AI tools (13% ‘significantly more’ plus 31% ‘slightly more’) considered that those tools met their needs better than Copilot.

| Sentiment | Responses (%) |
|---|---|
| Other generative AI products meet my needs significantly more than Copilot | 13% |
| Other generative AI products meet my needs slightly more than Copilot | 31% |
| Copilot and other generative AI products meet my needs to the same extent | 32% |
| Copilot meets my needs slightly more than other generative AI products | 13% |
| Copilot meets my needs significantly more than other generative AI products | 11% |

Copilot offers general functionality that matches most features of other publicly available generative AI products, but it may not offer an equivalent level of sophistication or depth across all of these features. Post-use survey respondents noted that they used other generative AI products for discrete use cases where Copilot was considered less advanced, such as writing and reviewing code, producing more complex written documents, generating images for internal presentations and searching research databases.

Despite the positive sentiment, the actual use of Copilot is moderate

A third of post-use survey respondents used Copilot daily.

While survey respondents were generally positive towards Copilot, the majority did not use it daily. As shown in Table 3, only a third (32%) of post-use survey respondents used Copilot on a daily basis (26% ‘a few times a day’ plus 6% ‘most of the day’).

| How frequently did you use Copilot for Microsoft 365 during the trial? | Responses (%) |
|---|---|
| Not at all | 1% |
| A few times a month | 21% |
| A few times a week | 46% |
| A few times a day | 26% |
| Most of the day | 6% |

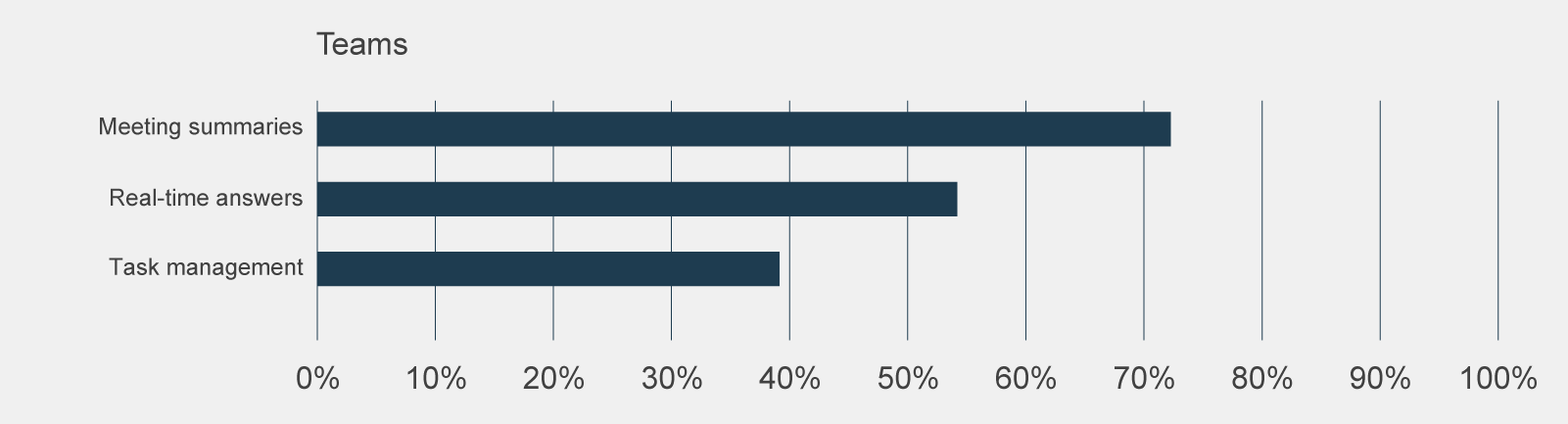

The moderate frequency of usage was broadly consistent across APS classifications and job families. There was, however, variance across cohorts in the features used. For example, a higher proportion of SES and EL2s used Teams meeting summarisation compared with other APS classifications. This may reflect the larger number of meetings these cohorts attend, and therefore the greater use and potential benefit of meeting summaries for SES and EL2 staff.

Post-use survey respondents who only used Copilot a few times a month explained that they stopped using it because they had a poor first experience with the tool, or because verifying and editing outputs took longer than creating the content themselves. Other post-use survey respondents remarked that they did not feel confident using the tool and could not find time for training amid other work commitments and time pressures.

Focus group participants also remarked that they often forgot Copilot was embedded in Microsoft 365 applications, as it was not obviously apparent in the user interface. Consequently, they neglected to use features, including forgetting to record meetings for transcription and summarisation. Internal research by the Commonwealth Scientific and Industrial Research Organisation (CSIRO) with its own trial participants similarly found that the user interface at times made features difficult to find (CSIRO 2024:28). Given that one of Copilot's arguable advantages is its integration with existing MS workflows, its reported lack of visibility to users largely diminishes its greatest value-add.

Overall, the usage of Copilot is in its infancy within the APS. Due to a combination of user capability, user interface, perceived benefit of the tool and convenience, Copilot is yet to be ingrained in the daily habits of APS staff.

Copilot was predominantly used to summarise and re-write content

Copilot use is concentrated in MS Teams and Word.

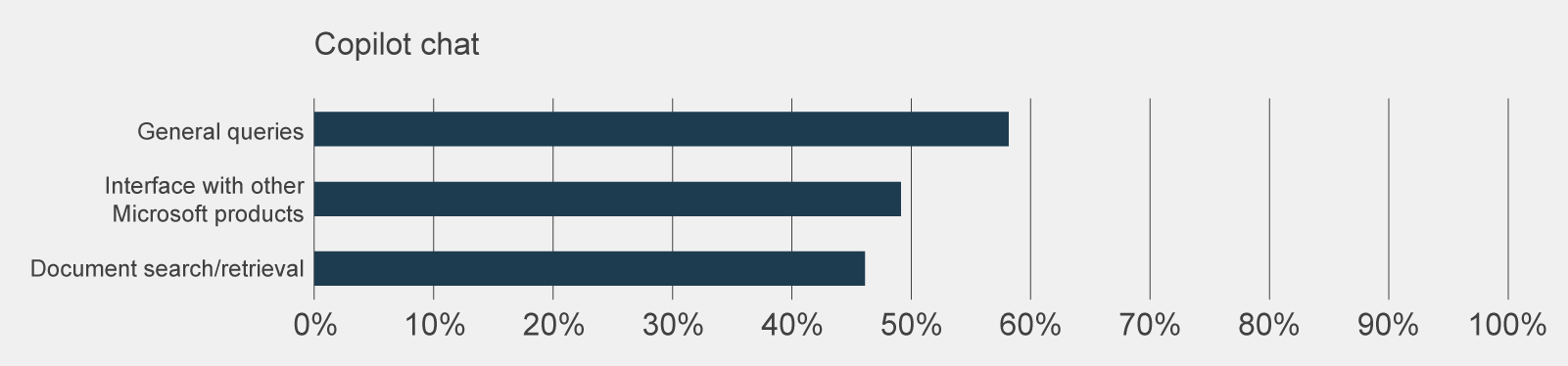

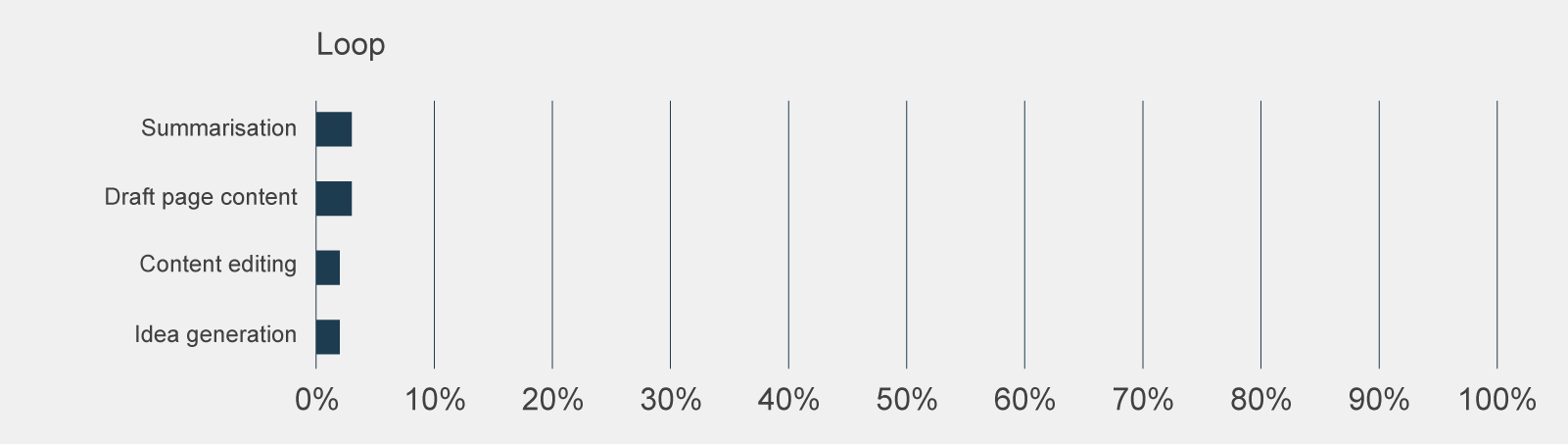

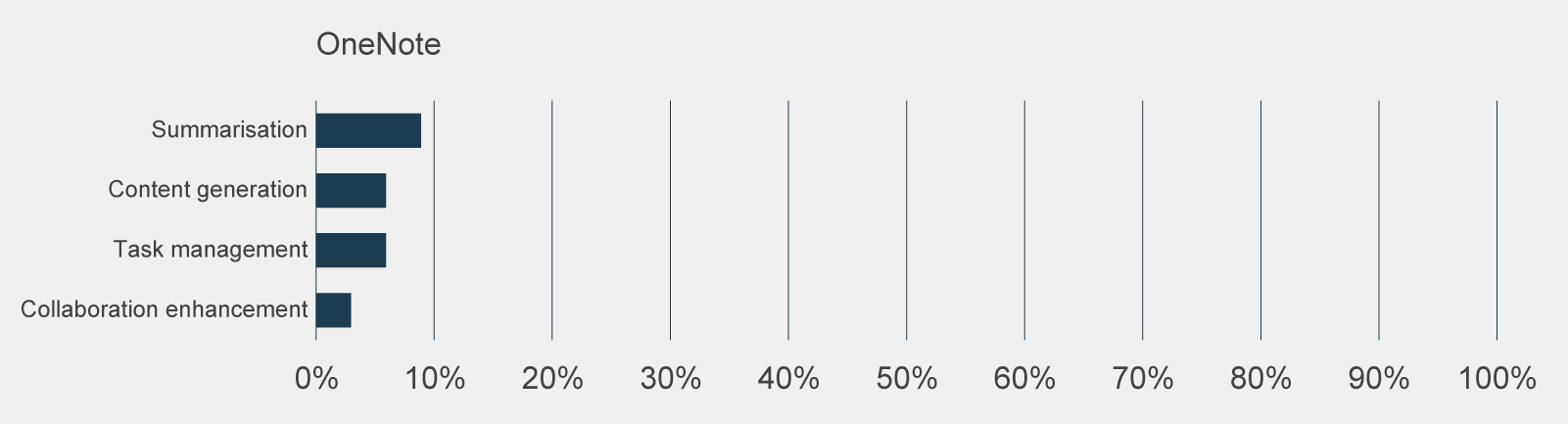

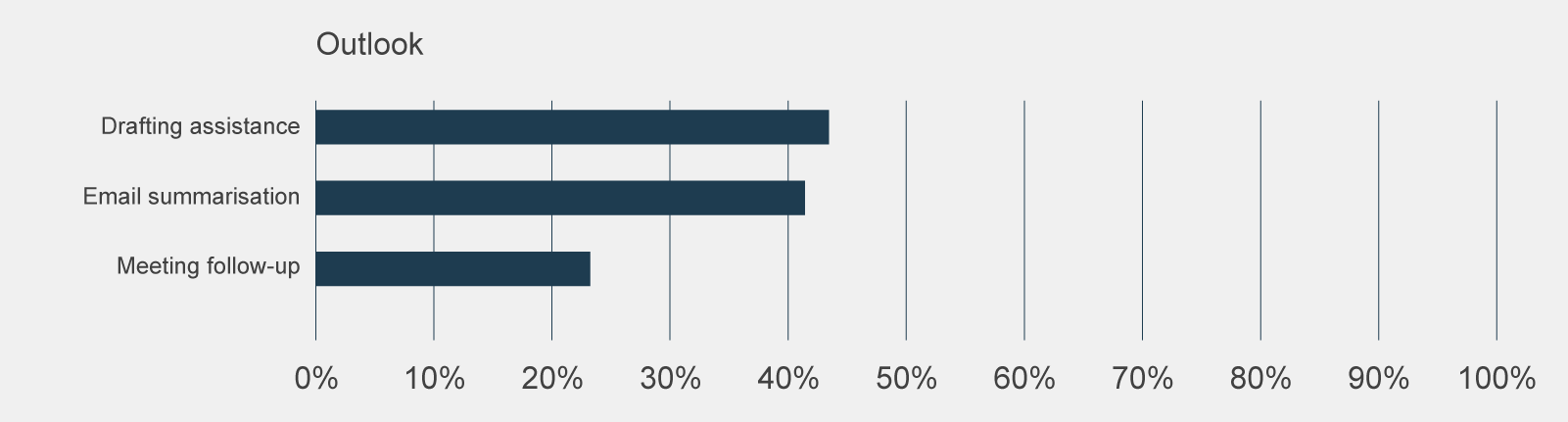

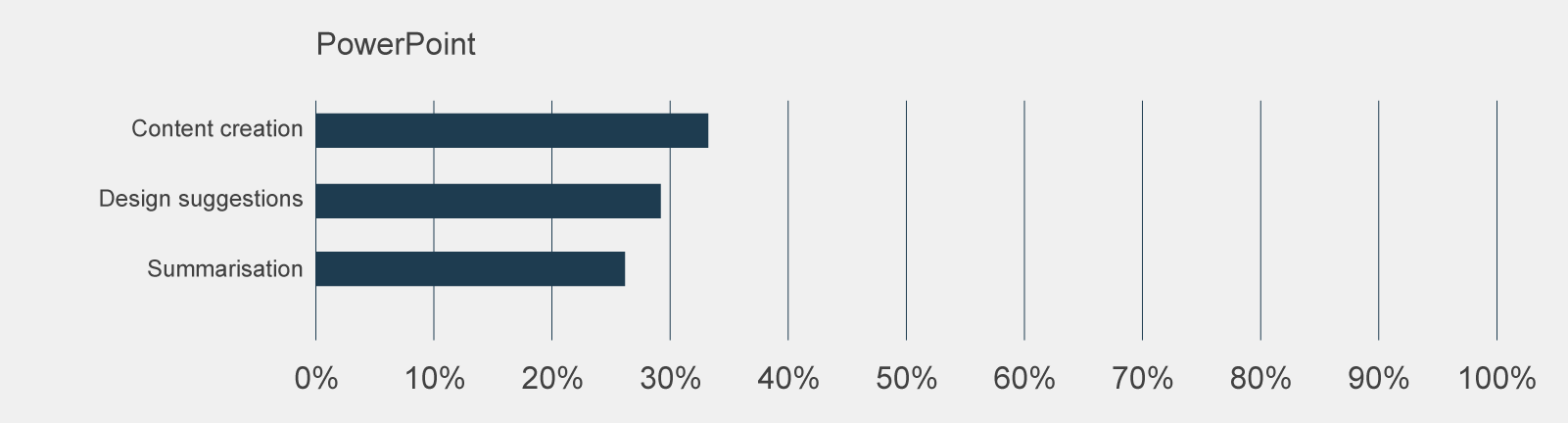

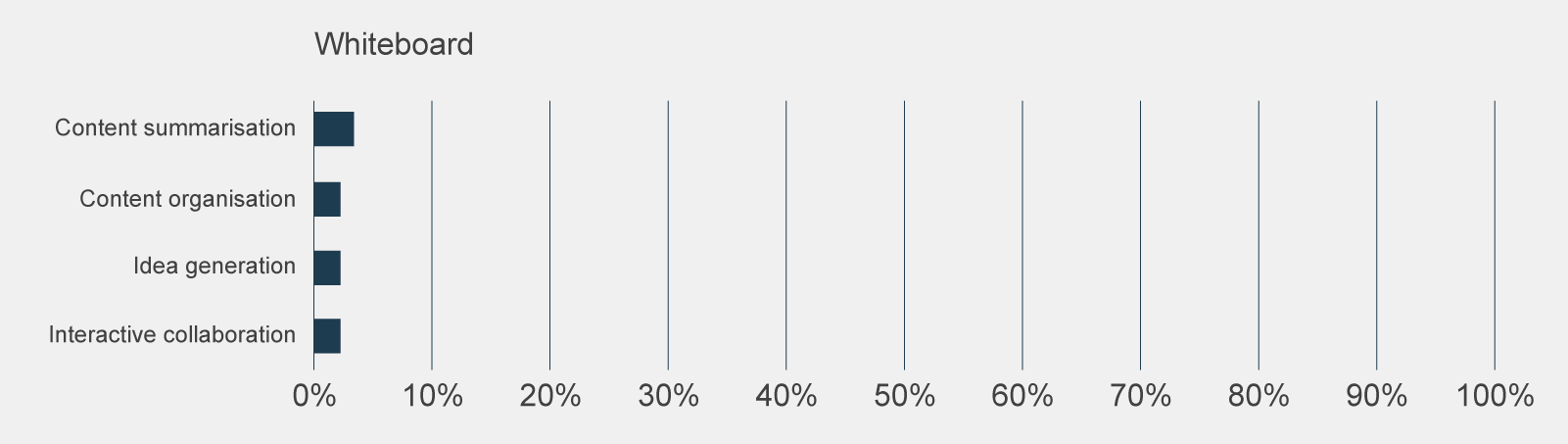

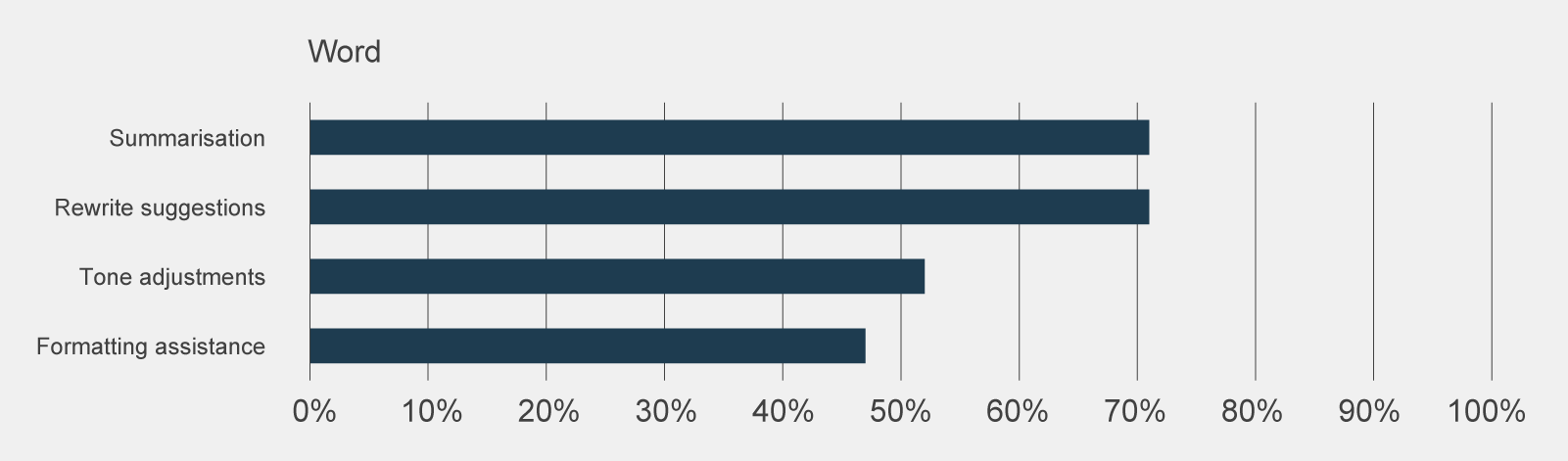

While Copilot can support a range of activities, trial participants' use of it was concentrated in a few. As shown in Figure 9, over 70% of post-use survey respondents used Copilot in Teams to summarise meetings, and in Word to summarise documents or re-write content.

Figure 9 | Post-use survey responses, grouped by Microsoft application, to 'How frequently did you use the following features?' of ‘a few times a month’ or more frequent

The main use cases illustrate the current perceived strengths of Copilot's predictive engine: it is adept at natural language processing and synthesis, producing human-like text in response to provided information and prompts.

Of note, use of Copilot within Whiteboard and Loop was particularly low. This is not surprising given the usage trends for these applications before the trial. Use of Copilot to create content and ideas was also relatively low compared with its use to summarise information.

There was a positive relationship between the provision of training and capability to use Copilot.

There was no standard approach to training trial participants on how to use Copilot. Participants adopted a combination of methods based on their perceived capability and the resources provided to them. The 4 main training options available to trial participants were:

- accessing Copilot resources on the Internet

- hands-on experimentation with Copilot

- attending agency-facilitated Copilot training

- attending Microsoft-led Copilot training.

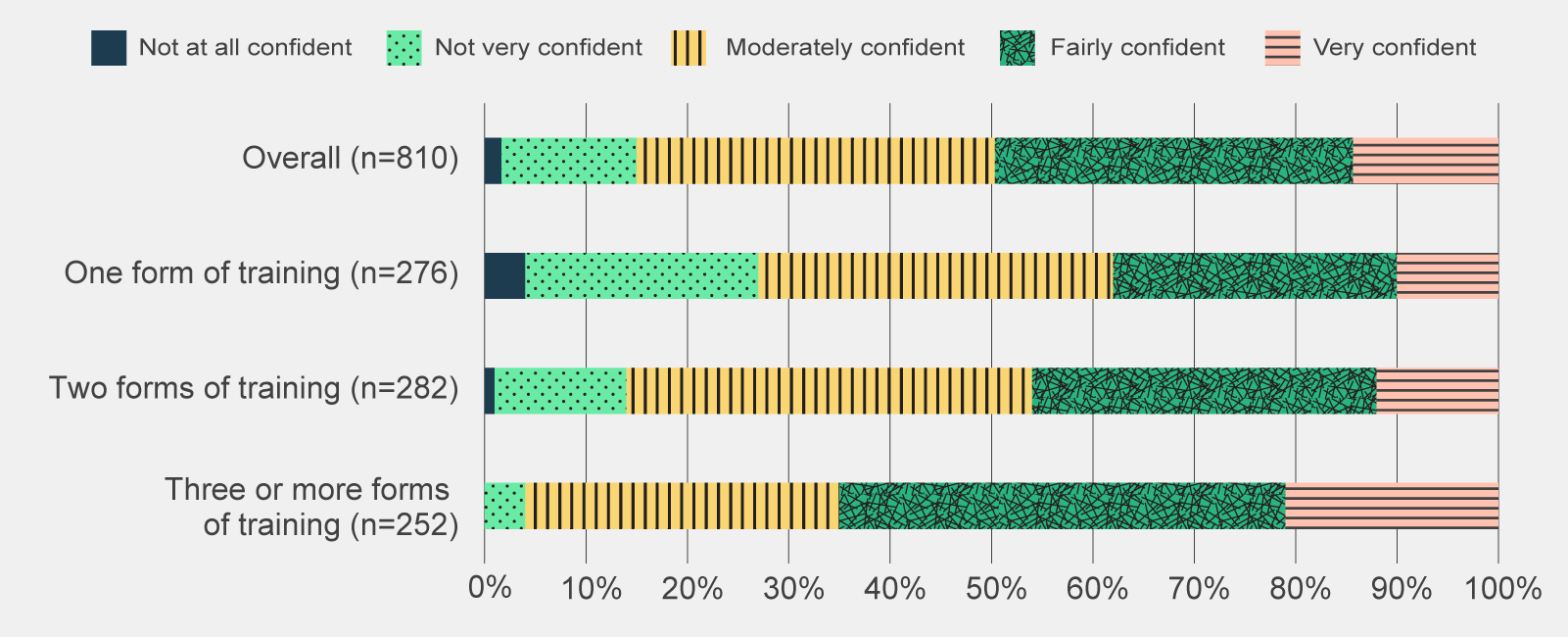

Almost half of all post-use survey respondents felt fairly or very confident in their skills and abilities to use Copilot. As depicted in Figure 10, the combined proportion of ‘fairly confident’ and ‘very confident’ responses is 16 percentage points higher among post-use survey respondents who accessed 3 or more forms of training than among respondents overall. This indicates a positive association between the amount of training participants received and their confidence in using Copilot. The importance of training was also highlighted in CSIRO's evaluation, which reported that trial participants needed additional training and/or resources to support advanced features and usage (CSIRO 2024:11).

The general sentiment among focus group participants who were involved in their agency’s implementation (namely chief technology officers (CTOs)/chief information officers (CIOs) and Copilot Champions) was that training requirements were greater than anticipated.

Complicating this is the diverse digital literacy and maturity of staff. Some agencies were better positioned to manage this than others, but the positive relationship between perceived capability and amount of training suggests that a concerted, material and ongoing effort is needed to build confidence. A one-off session is unlikely to have a lasting impact on a user's skills and abilities.

Trial participants broadly found Copilot training useful but noted areas for improvement.

The majority of post-use survey respondents (76%) who attended either agency or Microsoft-led training found the sessions useful. Anecdotal evidence from focus groups, however, suggested that more could be done to personalise training, particularly the training delivered by Microsoft.

Focus group participants believed that Microsoft training was too focused on the features of Copilot, rather than its applications and use cases. Participants also noted that some Microsoft trainers did not understand the APS context and could not answer targeted questions.

To supplement Microsoft-led sessions, almost all agencies that participated in the evaluation offered some form of training. The quality and comprehensiveness of this training, however, varied according to agencies' time and resource constraints. Some focus group participants' agencies had dedicated resources to lead the training effort, while others encouraged staff to learn Copilot through hands-on use.

Training was most effective when tailored to APS and agency context.

While one focus group participant found Microsoft's industry-specific advice and prompt library a useful aid to upskilling, others expressed a desire for cheat sheets with tailored prompts aligned to their roles. Several focus group participants remarked that they gained the most knowledge of impactful use cases through forums their agency created, such as ‘lunch and learns’, webinars, ‘promptathons’ or similar.

A more flexible, community-of-practice approach was also seen as an effective training method, as it provided a means to identify and propagate highly relevant use cases for Copilot. In general, there appears to be strong demand for training, even among cohorts with a high proportion of trial participants already experienced in generative AI. This included a desire for a wide range of training and information sources, supplemented by opportunities to share use cases and broad skills in generative AI.

There are opportunities to further explore use cases in the APS

There were a few novel use cases for Copilot in the APS.

Some trial participants identified novel use cases that, while highly specific to their roles, highlight the potential of Copilot to support higher-order and more bespoke activities. These included:

- Writing and reviewing PowerShell scripts (for task automation)

- Assessing documents against a rubric or criteria

- Converting technical documentation into plain language (to distribute to a broader audience)

- Drafting content for internal exercises (e.g. phishing simulations)

- Drafting content for business cases and Cabinet Submissions

- Converting information into standard forms and templates (for processing and assessment).

The presence of novel use cases highlights that there are opportunities to innovatively use Copilot beyond its summarisation, information search and content drafting features.

References

- Commonwealth Scientific and Industrial Research Organisation (2024) ‘Copilot for Microsoft 365; Data and Insights’, Commonwealth Scientific and Industrial Research Organisation, Canberra, ACT, 28.

- Department of Industry, Science and Resources (2024) ‘DISR Internal Mid-Trial Survey Insights’, Department of Industry, Science and Resources, Canberra, ACT, 2.