Access points

Access points are the online entry points or ‘front doors’ where users go to find and interact with government digital services. Access points for digital services typically take the form of:

- informational websites

- web applications accessed from a web browser

- online portals

- mobile apps.

Digital Experience Policy & Standards

Improving the experience for people and businesses interacting digitally with government information and services.

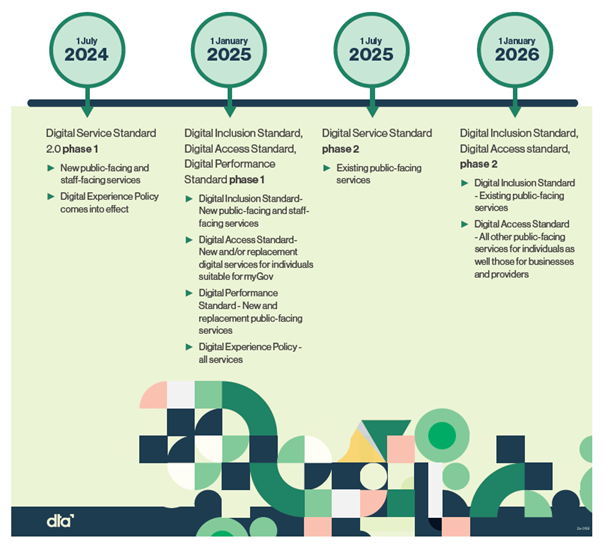

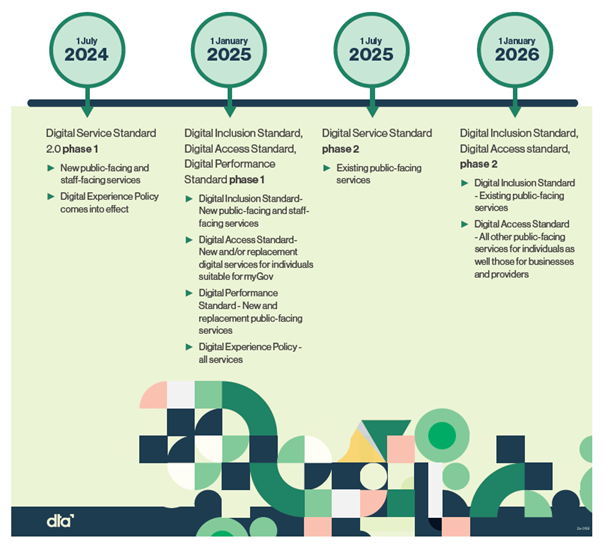

Digital Experience Policy Timeline

Phase 1: 1 July 2024

Digital Service Standard 2.0 phase 1

- New public-facing and staff-facing services

Phase 2: 1 January 2025

Digital Experience Policy, Digital Inclusion Standard, Digital Access Standard, Digital Performance Standard phase 1

- Digital Inclusion Standard – New public-facing and staff-facing services

- Digital Access Standard – New and/or replacement digital services for individuals suitable for myGov

- Digital Performance Standard – New and replacement public-facing services

- Digital Experience Policy – all services

Phase 3: 1 July 2025

Digital Service Standard phase 2

- Existing public-facing services

Phase 4: 1 January 2026

Digital Inclusion Standard, Digital Access Standard phase 2

- Digital Inclusion Standard – Existing public-facing services

- Digital Access Standard – All other public-facing services for individuals as well as those for businesses and providers.

Image description

The timeline image shows four stages of implementation for the Digital Experience Policy based on date.

Stage 1 is 1 July 2024: Digital Service Standard phase 1:

- New public-facing and staff-facing services.

Stage 2 is 1 January 2025: Digital Experience Policy, Digital Inclusion Standard, Digital Access Standard, and the Digital Performance Standard phase 1:

- Digital Inclusion Standard – new public-facing and staff-facing services

- Digital Access Standard – new and/or replacement digital services for individuals suitable for myGov

- Digital Performance Standard – new and replacement public-facing services

- Digital Experience Policy – all services.

Stage 3 is 1 July 2025: Digital Service Standard phase 2:

- Existing public-facing services.

Stage 4 is 1 January 2026: Digital Inclusion Standard, Digital Access Standard phase 2:

- Digital Inclusion Standard – existing public-facing services

- Digital Access Standard – all other public-facing services for individuals as well as those for businesses and providers.

The GovERP reuse assessment

The GovERP reuse assessment is part of the new APS ERP approach. The government-commissioned report contains 5 key observations and recommendations for reuse.

Connect with the digital community

Share, build or learn digital experience and skills with training and events, and collaborate with peers across government.